Let's be honest—nobody wants to manually sift through thousands of customer reviews or competitor articles to spot key themes. It's a soul-crushing task. This is exactly where a keyword extractor from text comes in. Think of it as your automated assistant, built to instantly pull the most important terms and phrases from any block of text you throw at it.

Why You Need a Keyword Extractor

Imagine you've just launched a new product and the feedback starts pouring in. Instead of dedicating days to reading every single comment, a keyword extractor could show you in seconds that "battery life" and "screen brightness" are the hot topics. That's the magic right there—it turns messy, unstructured text into organized, actionable insights.

We've come a long way from simple word counters. Today's tools are smart enough to understand context, the relationships between words, and their semantic meaning, giving you results that are incredibly accurate. For any business, this is a clear competitive edge.

Real-World Business Applications

The practical uses are surprisingly broad and can benefit multiple teams at once. For instance, your marketing team could reverse-engineer a competitor's entire content strategy. Just feed their top-ranking articles into an extractor to see which keywords they're hitting hard. This gives you a data-backed roadmap for your own content.

It’s the same for an e-commerce brand. Analyzing thousands of product reviews can quickly highlight recurring complaints or features customers absolutely love. This creates a direct line to the customer's voice, helping you prioritize what to fix or what to feature in your next ad campaign.

The real benefit of a keyword extractor isn't just about saving time. It's about making better, faster decisions with data pulled directly from the source.

The Impact of Automation and Accuracy

The efficiency boost is huge. In fact, many teams report that keyword extraction cuts down their manual analysis effort by over 70%. This also speeds up the time it takes to get insights by up to 60%, letting you react to market trends while they're still relevant.

This shift from manual grunt work to smart, AI-driven analysis is a game-changer for a few key roles:

- Marketers: Can run competitor analysis on the fly and find content gaps in minutes.

- Developers: Can parse dense documentation or user bug reports to find the most critical terms.

- Data Analysts: Can summarize huge volumes of qualitative data without getting bogged down.

Ultimately, getting good at this is about working smarter, not harder. The role of AI in content creation and analysis is only getting bigger, making a keyword extractor from text a tool you can't afford to ignore. Learning how to use one well gives you the power to find the signal in the noise.

Comparing Keyword Extraction Methods

Picking the right keyword extraction method feels a lot like choosing the right tool for a home improvement project. You wouldn't use a sledgehammer to hang a small picture frame, right? In the same way, your best approach depends entirely on your goal. Are you after a quick, high-level analysis, or do you need a much deeper dive into the semantic meaning of a text? Getting a handle on the core differences between these methods is the first real step toward building a keyword extractor that actually works for you.

The world of keyword extraction has come a long way, moving from simple word counts to truly sophisticated, context-aware AI models. At first, the techniques were all about frequency. Then, things got a bit smarter, with methods that could weigh a word's importance not just in one document but across an entire library of them.

Statistical Versus Graph-Based Models

The classic statistical approach, and one you'll still see plenty of, is TF-IDF (Term Frequency-Inverse Document Frequency). Its logic is simple but surprisingly effective: a word is probably important if it shows up a lot in one document but is rare across all other documents. This is great for filtering out common but ultimately meaningless words like "the" or "and." It’s fast, reliable, and perfect for straightforward tasks like summarizing a batch of news articles.

But statistical methods like TF-IDF have a blind spot—they often miss the crucial relationships between words. This is where graph-based models, such as TextRank, really come into their own. Drawing inspiration from Google's famous PageRank algorithm, TextRank constructs a web of words, connecting them based on how often they appear near each other. In this system, words that are linked to other important words gain a higher score. This makes it fantastic for pulling out meaningful phrases, not just isolated terms.

Keyword extraction has evolved dramatically since the early 2000s. While early methods relied on basic statistical counts, by the 2010s, graph-based algorithms like TextRank gained traction, vastly improving our ability to find meaningful keywords without needing supervision. For a deeper look into the history of these NLP advancements, check out the GraphAware blog.

The Rise of Semantic Understanding

Modern approaches push things even further by zeroing in on semantic meaning. Instead of just counting or linking words, these methods use advanced AI to grasp the context and intent woven into the text. They can figure out that "CEO," "founder," and "business leader" are all related concepts, even if the text doesn't repeat those exact words. This is where the real magic of AI-powered text analysis happens. If you're curious about the models behind this, our beginner's guide to AI text generation is a great place to start.

To help you choose the best technique for your needs, here's a quick comparison of the most common methods. This table lays out their core principles and ideal use cases, giving you a clearer picture of which tool is right for your job.

Comparison of Keyword Extraction Techniques

| Method | Underlying Principle | Best For | Limitations |

|---|---|---|---|

| TF-IDF | Statistical. Scores words based on their frequency in a document versus their rarity across a collection of documents. | Quick topic spotting, document indexing, and information retrieval where speed is essential. | Ignores word order and relationships, struggles with synonyms and context. |

| TextRank | Graph-based. Models text as a graph where words are nodes and co-occurrence forms edges. Ranks words by importance within the graph. | Extracting meaningful multi-word phrases and key sentences. Good for document summarization. | Can be computationally intensive on very large texts. Performance depends on window size. |

| Embedding Models | Semantic. Uses deep learning to convert words/phrases into vectors that capture their meaning. Keywords are found by clustering these vectors. | Complex tasks requiring deep contextual understanding, topic modeling, and identifying conceptually related terms. | Requires significant computational resources (like GPUs) and pre-trained models. Can be a black box. |

Ultimately, the right method comes down to balancing your need for speed, accuracy, and contextual depth. For many projects, a hybrid approach might even be the best solution.

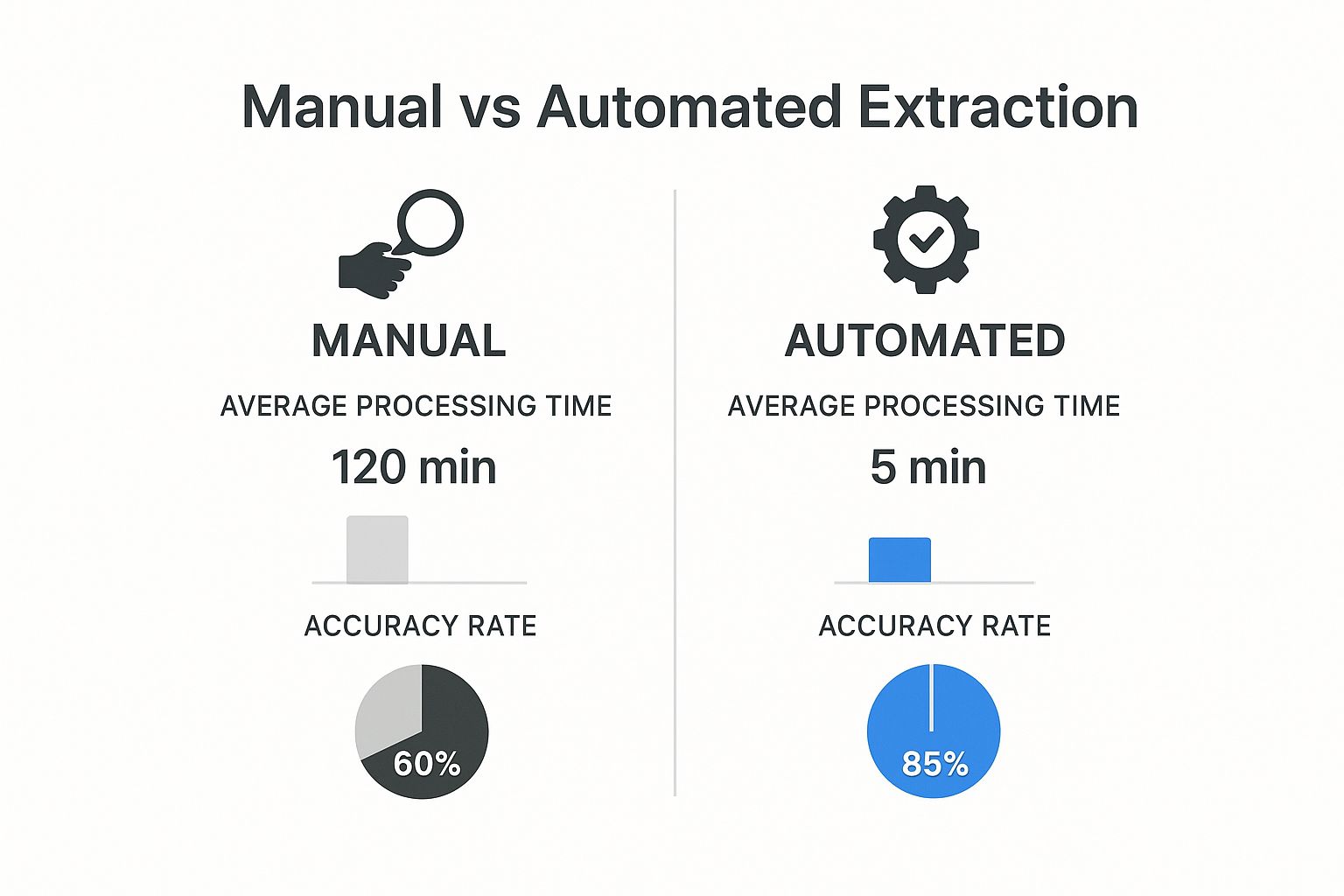

The infographic below really drives home the difference between doing this work by hand versus using an automated tool.

As you can see, automated methods provide a massive leap in both efficiency and precision. They can slash processing time from hours down to mere minutes while hitting an impressive 85% accuracy rate. This kind of performance boost is exactly why automated tools have become essential for anyone serious about data analysis today.

Getting Your Python Environment Ready for Text Analysis

Before we can start pulling keywords out of text, we need to get our digital workshop in order. A clean, properly set up Python environment is the bedrock of any serious text analysis project. It's a non-negotiable first step that saves you from a world of headaches later by making sure your code runs predictably and you have the right tools for the job.

The first thing we'll do is create a virtual environment. I can't stress this enough. Think of it as a pristine, isolated sandbox just for this project. It keeps all the specific Python packages we're about to install neatly contained, preventing them from messing with other projects on your machine. This is a pro-level habit that prevents frustrating version conflicts down the line.

Just open your terminal and run this:

python -m venv keyword_env

source keyword_env/bin/activate # On Windows, it's keyword_env\Scripts\activate

Once you see (keyword_env) in your terminal prompt, you're in. Now we can start installing our tools.

Installing the Core NLP Libraries

With our sandbox active, we'll use pip (Python's package manager) to grab three essential libraries. Each one plays a unique and critical role in our keyword extraction pipeline.

- NLTK (Natural Language Toolkit): This is one of the original workhorses of NLP in Python. We’ll lean on it for fundamental tasks like tokenization (splitting text into individual words) and, most importantly, for its built-in list of stopwords.

- spaCy: A powerful and incredibly fast library built for real-world, production-level NLP. We'll use spaCy for more sophisticated analysis, like identifying the part of speech for each word (is it a noun, a verb, etc.?), which is key to finding meaningful terms.

- scikit-learn: This is a titan in the machine learning world. While it can do almost anything, we only need a tiny piece of its power—specifically, its robust tools for statistical text analysis, like TF-IDF.

You can install them all in one go with this simple command:

pip install nltk spacy scikit-learn

This command tells pip to find and install the latest stable versions of these packages right into our keyword_env environment.

A quick tip from experience: a common rookie mistake is to skip the virtual environment and install packages globally. This inevitably leads to what we call "dependency hell," where Project A needs version 1.0 of a library, but Project B needs version 2.0. Always, always activate your virtual environment first.

Grabbing the Necessary Language Data

Just installing the libraries isn't quite enough. Both NLTK and spaCy need some extra data files to do their magic—things like pre-trained language models and resource lists.

First, for NLTK, we need to download its list of stopwords. These are common, low-value words like "and," "the," "is," and "in" that just add noise. Filtering them out is a simple but powerful preprocessing step that dramatically improves the quality of our results.

Next, for spaCy, we need to download a language model. These models are the "brains" of the operation, pre-trained on enormous amounts of text to understand grammar, syntax, and context. We'll start with the small, efficient English model.

Run these final commands in your terminal:

python -m spacy download en_core_web_sm

python -c "import nltk; nltk.download('stopwords'); nltk.download('punkt')"

Once those downloads finish, you're all set. Your environment is fully configured with the libraries installed and the language data ready to go. We've built a solid foundation, and now we're ready to start writing some code.

Let's Get Practical: Extracting Keywords with Python

https://www.youtube.com/embed/ZOgrhn2Uq0U

Theory is one thing, but rolling up your sleeves and writing code is where the real learning happens. Let’s build our first functional keyword extractor from text using Python and a popular library called the Natural Language Toolkit (NLTK). We'll go from a basic setup to running code that actually works.

Imagine you run an e-commerce store and you've just received a new customer review for a pair of wireless headphones. It reads:

"The sound quality on these headphones is brilliant for the price, truly immersive. However, the battery life is a major disappointment. It barely lasts a full workday, and the charging time is incredibly slow. The build quality feels solid, though."

Manually reading every review is impossible as you scale. Our goal here is to automatically pinpoint the core topics: "sound quality," "battery life," and "charging time." This is a perfect job for an automated keyword extractor.

Building a Simple Frequency-Based Extractor

We're going to start with a classic, straightforward approach: counting word frequency. The core idea is simple but powerful—important words tend to appear more often than less important ones.

To do this, our code will perform a few essential Natural Language Processing tasks:

- Tokenization: This just means breaking the review text down into a list of individual words, or "tokens."

- Stopword Removal: We'll filter out common, low-value words like "is," "a," and "the" that don't add much meaning.

- Frequency Counting: Finally, we'll tally up how many times the remaining, meaningful words appear.

Here’s the Python code that puts it all together.

import nltk

from nltk.corpus import stopwords

from nltk.tokenize import word_tokenize

from collections import Counter

Sample text from a product review

text = """

The sound quality on these headphones is brilliant for the price,

truly immersive. However, the battery life is a major disappointment.

It barely lasts a full workday, and the charging time is incredibly slow.

The build quality feels solid, though.

"""

Tokenize the text and convert to lower case

tokens = word_tokenize(text.lower())

Get the English stopword list

stop_words = set(stopwords.words('english'))

Filter out stopwords and punctuation

filtered_tokens = [

word for word in tokens

if word.isalpha() and word not in stop_words

]

Count the frequency of each word

word_counts = Counter(filtered_tokens)

Print the 5 most common keywords

print(word_counts.most_common(5))

When you run this script, it will spit out the most discussed topics from the review, giving you instant, actionable feedback. The real power comes from scaling this. You could analyze thousands of reviews in seconds, a task that would take a human team days.

This is just one example of how automation can streamline your work. If you're interested in going further, you can find other ways to automate content creation with AI and make your workflows even more efficient.

Interpreting the Output and Taking Action

What we just did touches on several key areas of Natural Language Processing, as this high-level overview from Wikipedia shows.

Our script performed lexical analysis (tokenization) and a basic form of syntactic analysis (filtering stopwords). We’ve effectively turned messy, unstructured text into a clean, structured list of keywords.

This structured data is what allows you to make smart business decisions. For our headphone review, the output immediately flags "battery" and "quality." This tells your product team exactly where customers are focused, guiding their efforts for future improvements. It’s a direct line from customer feedback to product strategy, all powered by a few lines of code.

Moving Beyond Word Counts: AI-Powered Semantic Extraction

Simple frequency counters are a decent starting point, but if you want to really understand what a piece of text is about, you have to go deeper. This is where AI-powered semantic analysis completely changes the game. Instead of just tallying words, a semantic keyword extractor from text gets a feel for the meaning and context behind them, almost like a human reader would.

This method relies on heavy-duty libraries like spaCy and powerful pre-trained models, such as those from Hugging Face. These models have been fed massive amounts of text, so they've learned to connect the dots. They inherently understand that terms like "CEO," "founder," and "company leader" are all pointing to the same core concept, even if they aren't the same exact words.

What you get is an extraction of core themes and ideas, not just a list of the most repeated words.

The Semantic Advantage in Practice

Let's go back to that product review for the wireless headphones. A basic counter might spit out "battery," "quality," and "sound." That’s okay, but a semantic model paints a much richer picture.

It can pinpoint concepts like "poor battery performance" or "superior audio experience" because it understands how adjectives, nouns, and the sentiment behind them all work together. This is a huge leap forward, and it's why semantic extraction is becoming the go-to for any serious text analysis. You're no longer just getting a word list; you're getting a map of the ideas driving your content.

The progress here is genuinely impressive. Some modern systems can encode text into a 'semantic fingerprint'—a unique digital signature of its meaning. This allows algorithms to measure the conceptual overlap between a term and the entire document, which has led to some major accuracy boosts. We're talking precision improvements of 10-15% over older methods, especially with complicated global datasets. You can play around with this idea using some of the free keyword extraction tools from Cortical.io.

When Should You Use Semantic Extraction?

So, do you always need the extra computational horsepower of a semantic model? Not necessarily. It’s all about picking the right tool for the job.

You'll want to reach for a semantic keyword extractor when:

- Context is King: Your analysis hinges on understanding nuance, sentiment, or complex ideas. This is absolutely critical for sifting through customer feedback, legal documents, or dense technical reports.

- You're Focused on Topic Modeling: The goal isn't just to find keywords but to uncover the main themes or topics across a huge collection of documents.

- Synonyms and Related Terms Matter: The text you're analyzing uses a lot of varied language, and you need a tool smart enough to know that "fast shipping" and "quick delivery" refer to the same thing.

For a quick-and-dirty analysis of straightforward text, a simpler frequency or TF-IDF model might be all you need—and it will be much faster. The trick is to match the sophistication of the tool to the demands of your project. It's a strategic choice, similar to how you’d use different tools to automate content creation for SEO; you select the specific feature that aligns with your immediate goal.

Frequently Asked Questions About Keyword Extraction

As you start working with keyword extractors, you're bound to have some questions. It’s a powerful technique, but it definitely has its quirks. Getting straight answers to these common questions can save you a lot of headaches and help you get the results you're after.

Let's dig into some of the things people ask us most often.

What Is the Difference Between Keyword Extraction and Keyword Research?

This is a big one, and it's easy to get them mixed up. At its core, the difference is about looking inward versus looking outward.

Keyword extraction is all about analysis. You take a piece of text that already exists—like a blog post, a customer review, or a research paper—and the tool automatically pulls out the most important terms and concepts from that specific document. It tells you what the text is about.

On the other hand, keyword research is about discovery. It’s the process you go through to find new keywords and phrases people are searching for online. This helps you figure out what content you should create in the future to attract an audience.

Think of it this way: Extraction analyzes the article you just finished reading. Research helps you decide what article to write next.

How Do I Choose the Right Extraction Model?

There’s no magic bullet here. The "best" model really comes down to your specific goals and the kind of text you're working with.

Here’s how I usually decide:

- For speed and simplicity: If I just need a quick-and-dirty overview of a clean, straightforward document, a statistical model like TF-IDF is perfect. It’s fast, doesn't require a ton of computing power, and gets the job done for basic topic identification.

- For depth and context: When the details matter—like understanding sentiment or abstract ideas—I'll always reach for a semantic model. Tools built on spaCy or a Hugging Face transformer are the heavy hitters. They demand more resources but provide incredibly accurate and nuanced insights that simpler models just can't see.

Can I Use a Keyword Extractor on Any Language?

Yes, but you have to be careful. The capability isn't automatic; it hinges entirely on the library and the specific pre-trained model you select.

Most of the major NLP libraries, including spaCy and NLTK, have multilingual support. The key is that you must intentionally download and load the correct language model for the text you're analyzing. Running a German text through an English-only model will give you garbage results.

Performance also varies. A model that has been trained on a massive English dataset will almost always outperform one trained on a smaller dataset for a less common language. Before you commit, always check the library's official documentation to see what languages are supported and what to expect.

How Accurate Is an Automated Keyword Extractor?

Accuracy is a moving target, not a single number. It's heavily influenced by the extraction method you use and, just as importantly, the quality of your input text.

A simple statistical method might hit 70-80% accuracy on a well-written, clearly structured article.

More advanced semantic models can get you above 90% accuracy because they go beyond just counting words. They actually understand the relationships between words and the overall context.

But here’s the most important tip I can give you: no model, no matter how sophisticated, can do its best work with messy data. The single most effective thing you can do to improve accuracy is to properly preprocess your text. Taking a few moments to clean up the text by removing stopwords and applying lemmatization will consistently deliver better keywords. If those terms are new to you, our guide on AI content creation for beginners is a great place to start.

Ready to move from theory to action? Stravo AI provides a full suite of AI tools, including advanced text analysis capabilities, to help you extract valuable insights from your documents in seconds. Stop guessing and start making data-driven decisions. Explore Stravo AI today!